Building Voice Agents

Session setup

Audio handling

Some transport layers, such as the default OpenAIRealtimeWebRTC, handle audio input and output automatically. For other transport mechanisms, such as OpenAIRealtimeWebSocket, you have to handle session audio yourself:

    import {
      RealtimeAgent,
      RealtimeSession,
      TransportLayerAudio,
    } from '@openai/agents/realtime';

    const agent = new RealtimeAgent({ name: 'My agent' });
    const session = new RealtimeSession(agent);
    const newlyRecordedAudio = new ArrayBuffer(0);

    session.on('audio', (event: TransportLayerAudio) => {
      // play your audio
    });

    // send new audio to the agent
    session.sendAudio(newlyRecordedAudio);

When the underlying transport supports it, session.muted reports the current mute state and session.mute(true | false) toggles microphone capture. OpenAIRealtimeWebSocket does not implement muting: session.muted returns null and session.mute() throws, so for WebSocket setups you should pause capture on your side and stop calling sendAudio() until the microphone should be live again.
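For the WebSocket case, the following is a minimal sketch of the client-side plumbing, not part of the SDK. It assumes your capture code yields Float32 samples in the range [-1, 1] (as the Web Audio API does) and that the session expects 16-bit little-endian PCM, matching the 'pcm16' format used elsewhere on this page. The names floatToPcm16, createMicGate, and AudioSink are hypothetical helpers introduced only for this illustration.

```typescript
// Anything with a sendAudio method; a RealtimeSession is assumed to fit this shape.
interface AudioSink {
  sendAudio(audio: ArrayBuffer): void;
}

// Convert Float32 samples in [-1, 1] to 16-bit little-endian PCM.
function floatToPcm16(samples: Float32Array): ArrayBuffer {
  const buffer = new ArrayBuffer(samples.length * 2);
  const view = new DataView(buffer);
  samples.forEach((sample, index) => {
    // Clamp first so out-of-range input cannot overflow the 16-bit range.
    const clamped = Math.max(-1, Math.min(1, sample));
    const scaled = clamped < 0 ? clamped * 0x8000 : clamped * 0x7fff;
    view.setInt16(index * 2, Math.round(scaled), true);
  });
  return buffer;
}

// A local "mute" for transports without session.mute():
// while the gate is closed, chunks are dropped and sendAudio() is never called.
function createMicGate(sink: AudioSink) {
  let live = true;
  return {
    setLive(value: boolean): void {
      live = value;
    },
    push(samples: Float32Array): boolean {
      if (!live) {
        return false;
      }
      sink.sendAudio(floatToPcm16(samples));
      return true;
    },
  };
}
```

In an application you would create the gate once with your session, call push() from the capture callback, and call setLive(false) when the user mutes the microphone.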

Session configuration

You can configure your session by passing additional options either to the RealtimeSession constructor or to connect(...).

    import { RealtimeAgent, RealtimeSession } from '@openai/agents/realtime';

    const agent = new RealtimeAgent({
      name: 'Greeter',
      instructions: 'Greet the user with cheer and answer questions.',
    });

    const session = new RealtimeSession(agent, {
      model: 'gpt-realtime',
      config: {
        outputModalities: ['text', 'audio'],
        audio: {
          input: {
            format: 'pcm16',
            transcription: {
              model: 'gpt-4o-mini-transcribe',
            },
          },
          output: {
            format: 'pcm16',
          },
        },
      },
    });

These transport layers allow you to pass any parameter that matches the Realtime API session object.

Prefer the newer config shape with outputModalities, audio.input, and audio.output. Legacy top-level fields such as modalities, inputAudioFormat, outputAudioFormat, inputAudioTranscription, and turnDetection are still normalized for backwards compatibility, but new code should use the nested audio structure shown here.

For parameters that are new and do not yet have a matching field in RealtimeSessionConfig, you can use providerData. Anything passed in providerData is passed through directly as part of the session object.

Additional RealtimeSession options you can set at construction time:

| Option | Type | Purpose |
|---|---|---|
| `context` | `TContext` | Extra local context merged into the session context. |
| `historyStoreAudio` | `boolean` | Store audio data in the local history snapshot (disabled by default). |
| `outputGuardrails` | `RealtimeOutputGuardrail[]` | Output guardrails for the session (see Guardrails). |
| `outputGuardrailSettings` | `RealtimeOutputGuardrailSettings` | Frequency and behavior for guardrail checks. |
| `tracingDisabled` | `boolean` | Disable tracing for the session. |
| `groupId` | `string` | Group traces across sessions or backend runs. |
| `traceMetadata` | `Record<string, any>` | Custom metadata to attach to session traces. |
| `workflowName` | `string` | Friendly name for the trace workflow. |
| `automaticallyTriggerResponseForMcpToolCalls` | `boolean` | Auto-trigger a model response when an MCP tool call completes (default: true). |
| `toolErrorFormatter` | `ToolErrorFormatter` | Customize tool approval rejection messages returned to the model. |

connect(...) options:

| Option | Type | Purpose |
|---|---|---|
| `apiKey` | `string \| (() => string \| Promise<string>)` | API key (or lazy loader) used for this connection. |
| `model` | `OpenAIRealtimeModels \| string` | Optional model override for the transport connection. |
| `url` | `string` | Optional custom Realtime endpoint URL. |
| `callId` | `string` | Attach to an existing SIP-initiated call/session. |

Agent capabilities

Handoffs

As with regular agents, you can use handoffs to split your agent into multiple agents and orchestrate between them. This can improve performance and lets each agent focus on a narrower problem.

    import { RealtimeAgent } from '@openai/agents/realtime';

    const mathTutorAgent = new RealtimeAgent({
      name: 'Math Tutor',
      handoffDescription: 'Specialist agent for math questions',
      instructions:
        'You provide help with math problems. Explain your reasoning at each step and include examples',
    });

    const agent = new RealtimeAgent({
      name: 'Greeter',
      instructions: 'Greet the user with cheer and answer questions.',
      handoffs: [mathTutorAgent],
    });

Handoffs behave slightly differently for Realtime Agents than for regular agents. When a handoff is performed, the ongoing session is updated with the new agent configuration. Because of this, the new agent automatically has access to the ongoing conversation history, and input filters are currently not applied.

This also means that the voice and model cannot be changed as part of a handoff, and you can only hand off to other Realtime Agents. If you need to use a different model, for example a reasoning model like gpt-5-mini, you can use delegation through tools.

Just like regular agents, Realtime Agents can call tools to perform actions. Realtime supports function tools (executed locally) and hosted MCP tools (executed remotely by the Realtime API). You can define a function tool using the same tool() helper you would use for a regular agent.

    import { tool, RealtimeAgent } from '@openai/agents/realtime';
    import { z } from 'zod';

    const getWeather = tool({
      name: 'get_weather',
      description: 'Return the weather for a city.',
      parameters: z.object({ city: z.string() }),
      async execute({ city }) {
        return `The weather in ${city} is sunny.`;
      },
    });

    const weatherAgent = new RealtimeAgent({
      name: 'Weather assistant',
      instructions: 'Answer weather questions.',
      tools: [getWeather],
    });

Function tools run in the same environment as your RealtimeSession. This means if you are running your session in the browser, the tool executes in the browser. If you need to perform sensitive actions, make an HTTP request to your backend within the tool.
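As a rough sketch of that last point, the helper below shows what the body of a browser-side tool might delegate to. It is an illustration under assumptions, not SDK code: the endpoint /api/orders/cancel, the helper name cancelOrderViaBackend, and the FetchLike type are all hypothetical, and the fetch implementation is injected so the helper can be exercised without a network.

```typescript
// Minimal fetch-like signature (hypothetical); the global fetch is expected to satisfy it.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; status: number; text(): Promise<string> }>;

// Hypothetical endpoint; replace with a route your backend actually exposes.
const CANCEL_ORDER_ENDPOINT = '/api/orders/cancel';

// Performs the sensitive action on the server and returns a string for the model.
async function cancelOrderViaBackend(
  orderId: string,
  fetchImpl: FetchLike,
): Promise<string> {
  const response = await fetchImpl(CANCEL_ORDER_ENDPOINT, {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({ orderId }),
  });
  if (!response.ok) {
    // Return a readable message so the agent can explain the failure to the user.
    return `Cancelling order ${orderId} failed with status ${response.status}.`;
  }
  return response.text();
}
```

A tool's execute function could then simply return the result of cancelOrderViaBackend, keeping credentials and business rules on the server.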

Hosted MCP tools can be configured with hostedMcpTool and are executed remotely. When MCP tool availability changes, the session emits mcp_tools_changed. To prevent the session from auto-triggering a model response after MCP tool calls complete, set automaticallyTriggerResponseForMcpToolCalls: false.

The current filtered MCP tool list is also available as session.availableMcpTools. Both that property and the mcp_tools_changed event reflect only the hosted MCP servers enabled on the active agent, after applying any allowed_tools filters from the agent configuration.

While a tool is executing, the agent cannot process new requests from the user. One way to improve the experience is to instruct your agent to announce when it is about to execute a tool, or to say specific phrases that buy it time while the tool runs.

If a function tool should finish without immediately triggering another model response, return backgroundResult(output) from @openai/agents/realtime.

This sends the tool output back to the session while leaving response triggering under your control.

Function tool timeout options (timeoutMs, timeoutBehavior, timeoutErrorFunction) work the same way in Realtime sessions. With the default error_as_result, the timeout message is sent as tool output. With raise_exception, the session emits an error event with ToolTimeoutError and does not send tool output for that call.

Accessing the conversation history

In addition to the arguments that the agent passed to a particular tool, you can also access a snapshot of the current conversation history that is tracked by the Realtime Session. This can be useful if you need to perform a more complex action based on the current state of the conversation or are planning to use tools for delegation.

    import {
      tool,
      RealtimeContextData,
      RealtimeItem,
    } from '@openai/agents/realtime';
    import { z } from 'zod';

    const parameters = z.object({
      request: z.string(),
    });

    const refundTool = tool<typeof parameters, RealtimeContextData>({
      name: 'Refund Expert',
      description: 'Evaluate a refund',
      parameters,
      execute: async ({ request }, details) => {
        // The history might not be available
        const history: RealtimeItem[] = details?.context?.history ?? [];
        // making your call to process the refund request
      },
    });

Approval before tool execution

If you define your tool with needsApproval: true, the agent will emit a tool_approval_requested event before executing the tool.

By listening to this event you can show a UI to the user to approve or reject the tool call.

    import { session } from './agent';

    session.on('tool_approval_requested', (_context, _agent, request) => {
      // show a UI to the user to approve or reject the tool call
      // you can use the `session.approve(...)` or `session.reject(...)` methods to approve or reject the tool call

      session.approve(request.approvalItem); // or session.reject(request.rawItem);
    });

Guardrails

Guardrails offer a way to monitor whether what the agent has said violates a set of rules and to immediately cut off the response. These guardrail checks are performed on the transcript of the agent's response and therefore require that the text output of your model is enabled (it is enabled by default).

The guardrails that you provide run asynchronously as a model response is returned, allowing you to cut off the response based on a predefined classification trigger, for example “mentions a specific banned word”.

When a guardrail trips, the session emits a guardrail_tripped event. The event also provides a details object containing the itemId that triggered the guardrail.

    import {
      RealtimeOutputGuardrail,
      RealtimeAgent,
      RealtimeSession,
    } from '@openai/agents/realtime';

    const agent = new RealtimeAgent({
      name: 'Greeter',
      instructions: 'Greet the user with cheer and answer questions.',
    });

    const guardrails: RealtimeOutputGuardrail[] = [
      {
        name: 'No mention of Dom',
        async execute({ agentOutput }) {
          const domInOutput = agentOutput.includes('Dom');
          return {
            tripwireTriggered: domInOutput,
            outputInfo: { domInOutput },
          };
        },
      },
    ];

    const guardedSession = new RealtimeSession(agent, {
      outputGuardrails: guardrails,
    });

By default, guardrails run every 100 characters and once more when the full response text has been generated. Because speaking the text normally takes longer than generating it, in most cases the guardrail should catch the violation before the user can hear it.
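To make the timing concrete, here is a small standalone simulation of a character-count debounce. It is only a mental model under assumptions, not the SDK's internal implementation: simulateDebouncedGuardrail and its types are hypothetical, and a negative debounceTextLength is treated as "check only at the end of the response".

```typescript
// Returns true when the tripwire should fire for the text seen so far.
type GuardrailCheck = (textSoFar: string) => boolean;

interface DebouncedRunResult {
  tripped: boolean;
  checkedAt: number[]; // transcript lengths at which the check ran
}

function simulateDebouncedGuardrail(
  deltas: string[],
  debounceTextLength: number,
  check: GuardrailCheck,
): DebouncedRunResult {
  let text = '';
  let lastCheckedLength = 0;
  const checkedAt: number[] = [];

  const runCheck = (): boolean => {
    lastCheckedLength = text.length;
    checkedAt.push(text.length);
    return check(text);
  };

  for (const delta of deltas) {
    text += delta;
    // Run the check once enough new characters have accumulated.
    const due =
      debounceTextLength >= 0 &&
      text.length - lastCheckedLength >= debounceTextLength;
    if (due && runCheck()) {
      return { tripped: true, checkedAt }; // cut the response off here
    }
  }

  // Final pass at the end of the response for any text not yet checked.
  if (text.length > lastCheckedLength && runCheck()) {
    return { tripped: true, checkedAt };
  }
  return { tripped: false, checkedAt };
}
```

A smaller debounce length catches violations earlier at the cost of running the check more often; a larger one does the opposite.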

If you want to modify this behavior, you can pass an outputGuardrailSettings object to the session.

    import { RealtimeAgent, RealtimeSession } from '@openai/agents/realtime';

    const agent = new RealtimeAgent({
      name: 'Greeter',
      instructions: 'Greet the user with cheer and answer questions.',
    });

    const guardedSession = new RealtimeSession(agent, {
      outputGuardrails: [
        /*...*/
      ],
      outputGuardrailSettings: {
        debounceTextLength: 500, // run guardrail every 500 characters or set it to -1 to run it only at the end
      },
    });

Interaction flow

Turn detection / voice activity detection

The Realtime Session will automatically detect when the user is speaking and trigger new turns using the built-in voice activity detection modes of the Realtime API.

You can change the voice activity detection mode by passing audio.input.turnDetection in the

session config.

    import { RealtimeSession } from '@openai/agents/realtime';
    import { agent } from './agent';

    const session = new RealtimeSession(agent, {
      model: 'gpt-realtime',
      config: {
        audio: {
          input: {
            turnDetection: {
              type: 'semantic_vad',
              eagerness: 'medium',
              createResponse: true,
              interruptResponse: true,
            },
          },
        },
      },
    });

Modifying the turn detection settings can help reduce unwanted interruptions and improve how silence is handled. Check out the Realtime API documentation for more details on the different settings.
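For comparison, a threshold-based configuration might look like the fragment below. This is a sketch under assumptions: the field names are assumed to be the camelCase counterparts of the Realtime API's server_vad settings (threshold, prefix_padding_ms, silence_duration_ms), and the values are illustrative rather than recommended. Verify both against the Realtime API documentation before relying on them.

```typescript
// Hypothetical value for config.audio.input.turnDetection; illustrative numbers only.
const serverVadTurnDetection = {
  type: 'server_vad',
  threshold: 0.6, // assumed: higher values require louder audio to count as speech
  prefixPaddingMs: 300, // assumed: audio kept from just before speech was detected
  silenceDurationMs: 700, // assumed: silence required before the turn is treated as finished
  createResponse: true,
  interruptResponse: true,
};
```

Raising the silence duration generally makes the agent wait longer before replying, which can reduce premature interruptions at the cost of slower responses.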

Interruptions

When using the built-in voice activity detection, speaking over the agent automatically triggers the agent to detect and update its context based on what was said. It will also emit an audio_interrupted event. This can be used to immediately stop all audio playback (only applicable to WebSocket connections).

    import { session } from './agent';

    session.on('audio_interrupted', () => {
      // handle local playback interruption
    });

If you want to perform a manual interruption, for example if you want to offer a “stop” button in your UI, you can call interrupt() manually:

    import { session } from './agent';

    session.interrupt();
    // this will still trigger the `audio_interrupted` event for you
    // to cut off the audio playback when using WebSockets

Either way, the Realtime Session interrupts the agent's generation, truncates its knowledge of what was said to the user, and updates the history.

If you are using WebRTC to connect to your agent, it will also clear the audio output. If you are using WebSocket, you will need to handle this yourself by stopping audio playback of whatever has been queued up to be played.
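For the WebSocket case, one way to structure this is a small playback queue that is flushed on interruption. The class below is a hypothetical sketch, not an SDK type; the wiring comments assume that the audio event payload exposes the audio bytes as event.data.

```typescript
// Holds audio chunks that have been received but not yet played.
class PlaybackQueue {
  private chunks: ArrayBuffer[] = [];

  enqueue(chunk: ArrayBuffer): void {
    this.chunks.push(chunk);
  }

  // Returns the next chunk to play, or undefined when nothing is queued.
  next(): ArrayBuffer | undefined {
    return this.chunks.shift();
  }

  pending(): number {
    return this.chunks.length;
  }

  // Drops everything that has not been played yet and reports how much was dropped.
  clear(): number {
    const dropped = this.chunks.length;
    this.chunks = [];
    return dropped;
  }
}

// Wiring sketch (assumes event.data holds the audio bytes):
//   const queue = new PlaybackQueue();
//   session.on('audio', (event) => queue.enqueue(event.data));
//   session.on('audio_interrupted', () => {
//     queue.clear();
//     // also stop whatever chunk your audio output is currently playing
//   });
```

The queue only covers audio that has not started playing; stopping the chunk that is currently audible depends on your playback API and is left out of the sketch.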

Text input

If you want to send text input to your agent, you can use the sendMessage method on the RealtimeSession.

This can be useful if you want to enable your user to interface in both modalities with the agent, or to provide additional context to the conversation.

    import { RealtimeSession, RealtimeAgent } from '@openai/agents/realtime';

    const agent = new RealtimeAgent({
      name: 'Assistant',
    });

    const session = new RealtimeSession(agent, {
      model: 'gpt-realtime',
    });

    session.sendMessage('Hello, how are you?');

Conversation state and delegation

Conversation history management

The RealtimeSession automatically manages the conversation history in a history property.

You can use this to render the history to the customer or perform additional actions on it. Because this history changes constantly during the course of the conversation, you can listen for the history_updated event.

If you want to modify the history, for example by removing a message entirely or updating its transcript, you can use the updateHistory method.

    import { RealtimeSession, RealtimeAgent } from '@openai/agents/realtime';

    const agent = new RealtimeAgent({
      name: 'Assistant',
    });

    const session = new RealtimeSession(agent, {
      model: 'gpt-realtime',
    });

    await session.connect({ apiKey: '<client-api-key>' });

    // listening to the history_updated event
    session.on('history_updated', (history) => {
      // returns the full history of the session
      console.log(history);
    });

    // Option 1: explicit setting
    session.updateHistory([
      /* specific history */
    ]);

    // Option 2: override based on current state like removing all agent messages
    session.updateHistory((currentHistory) => {
      return currentHistory.filter(
        (item) => !(item.type === 'message' && item.role === 'assistant'),
      );
    });

Limitations

- You currently cannot update or change function tool calls after the fact

- Text output in the history requires transcripts and text modalities to be enabled

- Responses that were truncated due to an interruption do not have a transcript
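Because of the last two limitations, UI code that renders the history should tolerate items without any text. The sketch below shows one defensive approach. The item shape is a simplified assumption made for this example (HistoryItemLike and MessagePart are hypothetical stand-ins, not the SDK's RealtimeItem type), so adapt the field names to the items you actually receive.

```typescript
// Assumed, simplified shape of history entries for illustration only.
interface MessagePart {
  type: string;
  text?: string;
  transcript?: string | null;
}

interface HistoryItemLike {
  itemId: string;
  type: string;
  role?: string;
  content?: MessagePart[];
}

// Returns a display line for message items, or null for anything else (such as tool calls).
function describeHistoryItem(item: HistoryItemLike): string | null {
  if (item.type !== 'message' || !item.role) {
    return null;
  }
  const text = (item.content ?? [])
    .map((part) => part.text ?? part.transcript ?? '')
    .filter((piece) => piece.length > 0)
    .join(' ');
  // Interrupted responses may have no transcript, so fall back to a placeholder.
  return `${item.role}: ${text.length > 0 ? text : '[no transcript available]'}`;
}
```

A history_updated listener could map each item through describeHistoryItem and skip the null results before rendering.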

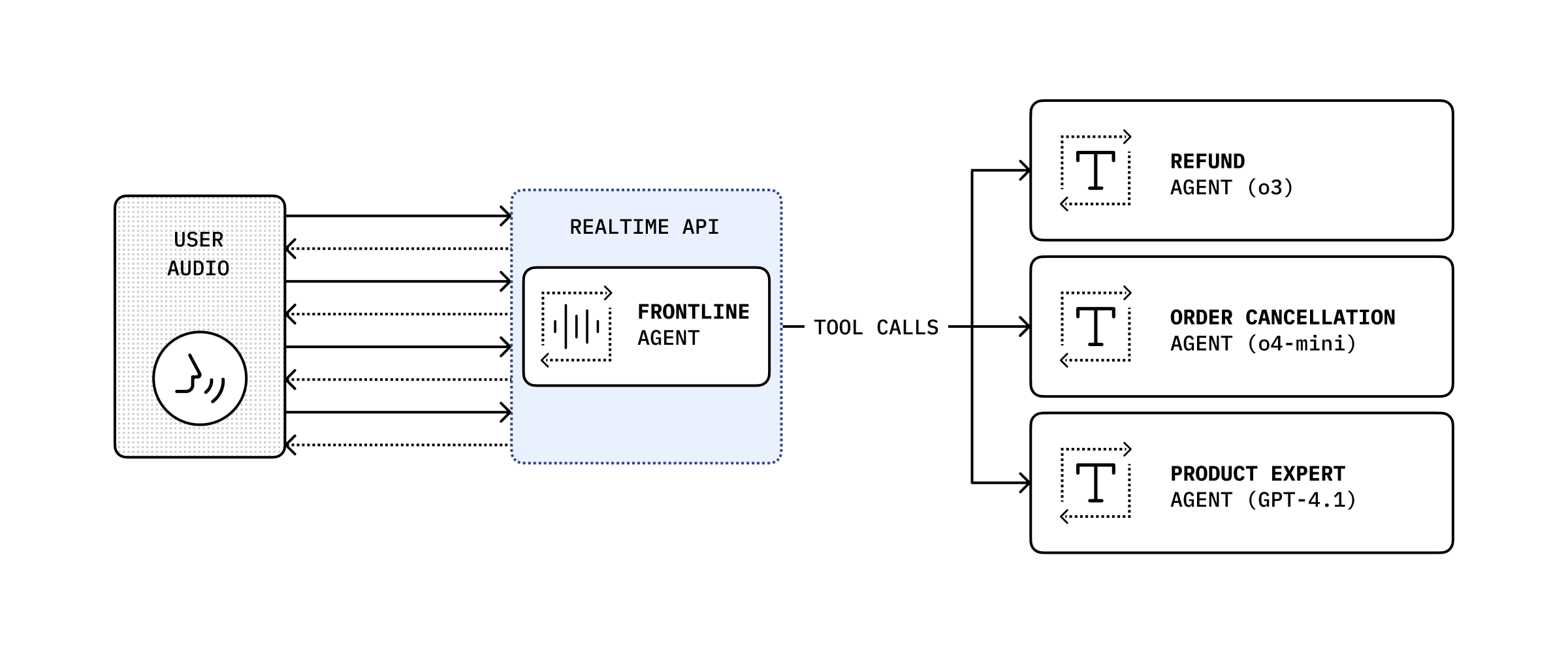

Delegation through tools


By combining the conversation history with a tool call, you can delegate the conversation to another backend agent to perform a more complex action and then pass it back as the result to the user.

    import {
      RealtimeAgent,
      RealtimeContextData,
      tool,
    } from '@openai/agents/realtime';
    import { handleRefundRequest } from './serverAgent';
    import z from 'zod';

    const refundSupervisorParameters = z.object({
      request: z.string(),
    });

    const refundSupervisor = tool<
      typeof refundSupervisorParameters,
      RealtimeContextData
    >({
      name: 'escalateToRefundSupervisor',
      description: 'Escalate a refund request to the refund supervisor',
      parameters: refundSupervisorParameters,
      execute: async ({ request }, details) => {
        // This will execute on the server
        return handleRefundRequest(request, details?.context?.history ?? []);
      },
    });

    const agent = new RealtimeAgent({
      name: 'Customer Support',
      instructions:
        'You are a customer support agent. If you receive any requests for refunds, you need to delegate to your supervisor.',
      tools: [refundSupervisor],
    });

The code below is then executed on the server, in this example through a server action in Next.js.

    // This runs on the server
    import 'server-only';

    import { Agent, run } from '@openai/agents';
    import type { RealtimeItem } from '@openai/agents/realtime';
    import z from 'zod';

    const agent = new Agent({
      name: 'Refund Expert',
      instructions:
        'You are a refund expert. You are given a request to process a refund and you need to determine if the request is valid.',
      model: 'gpt-5-mini',
      outputType: z.object({
        reasoning: z.string(),
        refundApproved: z.boolean(),
      }),
    });

    export async function handleRefundRequest(
      request: string,
      history: RealtimeItem[],
    ) {
      const input = `
    The user has requested a refund.

    The request is: ${request}

    Current conversation history:
    ${JSON.stringify(history, null, 2)}
    `.trim();

      const result = await run(agent, input);

      return JSON.stringify(result.finalOutput, null, 2);
    }