Model context protocol (MCP)

The Model context protocol (aka MCP) is a way to provide tools and context to the LLM. From the MCP docs:

MCP is an open protocol that standardizes how applications provide context to LLMs. Think of MCP like a USB-C port for AI applications. Just as USB-C provides a standardized way to connect your devices to various peripherals and accessories, MCP provides a standardized way to connect AI models to different data sources and tools.

The Agents SDK has support for MCP. This enables you to use a wide range of MCP servers to provide tools to your Agents.

MCP servers

Currently, the MCP spec defines three kinds of servers, based on the transport mechanism they use:

- stdio servers run as a subprocess of your application. You can think of them as running "locally".

- HTTP over SSE servers run remotely. You connect to them via a URL.

- Streamable HTTP servers run remotely using the Streamable HTTP transport defined in the MCP spec.

You can use the MCPServerStdio, MCPServerSse, and MCPServerStreamableHttp classes to connect to these servers.

For example, this is how you'd use the official MCP filesystem server.

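import asyncio

from agents import Agent
from agents.mcp import MCPServerStdio
from agents.run_context import RunContextWrapper

# Directory the filesystem server may access (placeholder path; use your own)
samples_dir = "./sample_files"


async def main() -> None:
    async with MCPServerStdio(
        params={
            "command": "npx",
            "args": ["-y", "@modelcontextprotocol/server-filesystem", samples_dir],
        }
    ) as server:
        # Note: In practice, you typically add the server to an Agent
        # and let the framework handle tool listing automatically.
        # Direct calls to list_tools() require run_context and agent parameters.
        run_context = RunContextWrapper(context=None)
        agent = Agent(name="test", instructions="test")
        tools = await server.list_tools(run_context, agent)
        print([tool.name for tool in tools])


asyncio.run(main())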

Using MCP servers

MCP servers can be added to Agents. The Agents SDK will call list_tools() on the MCP servers each time the Agent is run. This makes the LLM aware of the MCP server's tools. When the LLM calls a tool from an MCP server, the SDK calls call_tool() on that server.

agent = Agent(
    name="Assistant",
    instructions="Use the tools to achieve the task",
    mcp_servers=[mcp_server_1, mcp_server_2],
)
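The division of labour described above (list tools at the start of a run, route each tool call back to the server that advertised it) can be modelled in a few lines. The sketch below is a toy under stated assumptions, not the Agents SDK's implementation: FakeServer, gather_tools and dispatch are hypothetical names, the servers are synchronous in-process objects rather than real MCP connections, and tools are plain Python functions.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class FakeServer:
    """Hypothetical stand-in for an MCP server: a name plus named tool functions."""
    name: str
    tools: Dict[str, Callable[..., Any]] = field(default_factory=dict)
    list_calls: int = 0  # how many times list_tools() has been called

    def list_tools(self) -> List[str]:
        self.list_calls += 1
        return sorted(self.tools)

    def call_tool(self, tool_name: str, arguments: Dict[str, Any]) -> Any:
        return self.tools[tool_name](**arguments)


def gather_tools(servers: List[FakeServer]) -> Dict[str, FakeServer]:
    """Ask every server for its tools once per run; map tool name -> owning server."""
    registry: Dict[str, FakeServer] = {}
    for server in servers:
        for tool_name in server.list_tools():
            # This toy refuses ambiguous names so every call has exactly one target
            if tool_name in registry:
                raise ValueError(f"duplicate tool name: {tool_name}")
            registry[tool_name] = server
    return registry


def dispatch(registry: Dict[str, FakeServer], tool_name: str, arguments: Dict[str, Any]) -> Any:
    """Route a tool call chosen by the model to the server that advertised the tool."""
    return registry[tool_name].call_tool(tool_name, arguments)


math_server = FakeServer("math", {"add": lambda a, b: a + b})
text_server = FakeServer("text", {"upper": lambda s: s.upper()})

registry = gather_tools([math_server, text_server])
print(sorted(registry))                             # ['add', 'upper']
print(dispatch(registry, "add", {"a": 2, "b": 3}))  # 5
print(dispatch(registry, "upper", {"s": "mcp"}))    # MCP
```

In the real SDK both steps happen for you once the servers are passed via mcp_servers; the point of the toy is only that the model sees a merged tool list while each call is executed by the server that owns the tool.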

Tool filtering

You can filter which tools are available to your Agent by configuring tool filters on MCP servers. The SDK supports both static and dynamic tool filtering.

Static tool filtering

For simple allow/block lists, you can use static filtering:

from agents.mcp import MCPServerStdio, create_static_tool_filter

# Only expose specific tools from this server
server = MCPServerStdio(
    params={
        "command": "npx",
        "args": ["-y", "@modelcontextprotocol/server-filesystem", samples_dir],
    },
    tool_filter=create_static_tool_filter(
        allowed_tool_names=["read_file", "write_file"]
    ),
)

# Exclude specific tools from this server
server = MCPServerStdio(
    params={
        "command": "npx",
        "args": ["-y", "@modelcontextprotocol/server-filesystem", samples_dir],
    },
    tool_filter=create_static_tool_filter(
        blocked_tool_names=["delete_file"]
    ),
)

When both allowed_tool_names and blocked_tool_names are configured, the processing order is:

1. First apply allowed_tool_names (allowlist) - only keep the specified tools

2. Then apply blocked_tool_names (blocklist) - exclude specified tools from the remaining tools

For example, if you configure allowed_tool_names=["read_file", "write_file", "delete_file"] and blocked_tool_names=["delete_file"], only read_file and write_file tools will be available.
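The two-step order can be restated as a small pure function over tool names. This is an illustrative sketch, not the SDK's code: apply_static_filter is a hypothetical helper, and it works on plain strings rather than tool objects. It reproduces the example above, where delete_file survives the allowlist but is then removed by the blocklist.

```python
from typing import List, Optional


def apply_static_filter(
    tool_names: List[str],
    allowed_tool_names: Optional[List[str]] = None,
    blocked_tool_names: Optional[List[str]] = None,
) -> List[str]:
    """Hypothetical re-statement of the allowlist-then-blocklist order."""
    result = list(tool_names)
    # Step 1: if an allowlist is configured, keep only the tools it names
    if allowed_tool_names is not None:
        allowed = set(allowed_tool_names)
        result = [name for name in result if name in allowed]
    # Step 2: if a blocklist is configured, drop any remaining tool it names
    if blocked_tool_names is not None:
        blocked = set(blocked_tool_names)
        result = [name for name in result if name not in blocked]
    return result


server_tools = ["read_file", "write_file", "delete_file", "list_directory"]
print(apply_static_filter(
    server_tools,
    allowed_tool_names=["read_file", "write_file", "delete_file"],
    blocked_tool_names=["delete_file"],
))  # ['read_file', 'write_file']
```

Because the blocklist runs second, a name that appears in both lists is always excluded.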

Dynamic tool filtering

For more complex filtering logic, you can use dynamic filters with functions:

from agents.mcp import MCPServerStdio, ToolFilterContext

# Simple synchronous filter
def custom_filter(context: ToolFilterContext, tool) -> bool:
    """Example of a custom tool filter."""
    # Filter logic based on tool name patterns
    return tool.name.startswith("allowed_prefix")

# Context-aware filter
def context_aware_filter(context: ToolFilterContext, tool) -> bool:
    """Filter tools based on context information."""
    # Access agent information
    agent_name = context.agent.name
    # Access server information
    server_name = context.server_name
    # Implement your custom filtering logic here
    # (some_filtering_logic is a placeholder for your own function)
    return some_filtering_logic(agent_name, server_name, tool)

# Asynchronous filter
async def async_filter(context: ToolFilterContext, tool) -> bool:
    """Example of an asynchronous filter."""
    # Perform async operations if needed
    # (some_async_check is a placeholder for your own coroutine)
    result = await some_async_check(context, tool)
    return result

server = MCPServerStdio(
    params={
        "command": "npx",
        "args": ["-y", "@modelcontextprotocol/server-filesystem", samples_dir],
    },
    tool_filter=custom_filter,  # or context_aware_filter or async_filter
)

The ToolFilterContext provides access to:

- run_context: The current run context

- agent: The agent requesting the tools

- server_name: The name of the MCP server
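To see how those three fields can drive a decision, here is a self-contained sketch that runs without the SDK. FakeAgent, FakeTool and FilterContext are hypothetical stand-ins that only mirror the fields listed above, the two policies are invented for illustration, and filter_tools is a hypothetical driver showing one way to accept both plain and async filters (await the result only when it is awaitable). In real use the SDK builds the ToolFilterContext and applies your filter for you.

```python
import asyncio
import inspect
from dataclasses import dataclass
from typing import Any, Awaitable, Callable, List, Union


@dataclass
class FakeAgent:
    name: str


@dataclass
class FakeTool:
    name: str


@dataclass
class FilterContext:
    """Hypothetical stand-in with the same three fields as ToolFilterContext."""
    run_context: Any
    agent: FakeAgent
    server_name: str


FilterFn = Callable[[FilterContext, FakeTool], Union[bool, Awaitable[bool]]]


def read_only_for_reviewers(context: FilterContext, tool: FakeTool) -> bool:
    """Sync filter (invented policy): hide write_* tools from the agent named 'Reviewer'."""
    if context.agent.name == "Reviewer":
        return not tool.name.startswith("write_")
    return True


async def only_filesystem_server(context: FilterContext, tool: FakeTool) -> bool:
    """Async filter (invented policy): keep tools only from a server named 'filesystem'."""
    await asyncio.sleep(0)  # placeholder for real async work, e.g. a permission lookup
    return context.server_name == "filesystem"


async def filter_tools(context: FilterContext, tools: List[FakeTool], tool_filter: FilterFn) -> List[str]:
    """Apply a sync or async filter to each tool, awaiting only awaitable results."""
    kept: List[str] = []
    for tool in tools:
        decision = tool_filter(context, tool)
        if inspect.isawaitable(decision):
            decision = await decision
        if decision:
            kept.append(tool.name)
    return kept


tools = [FakeTool("read_file"), FakeTool("write_file")]
reviewer_ctx = FilterContext(run_context=None, agent=FakeAgent("Reviewer"), server_name="filesystem")
other_ctx = FilterContext(run_context=None, agent=FakeAgent("Writer"), server_name="git")

print(asyncio.run(filter_tools(reviewer_ctx, tools, read_only_for_reviewers)))  # ['read_file']
print(asyncio.run(filter_tools(other_ctx, tools, only_filesystem_server)))      # []
```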

Caching

Every time an Agent runs, it calls list_tools() on the MCP server. This adds latency to each run, especially when the server is remote. To cache the list of tools automatically, pass cache_tools_list=True to MCPServerStdio, MCPServerSse, or MCPServerStreamableHttp. Only do this if you're certain the tool list will not change.

If you want to invalidate the cache, you can call invalidate_tools_cache() on the servers.
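The trade-off behind caching (fewer round trips, but a possibly stale list) can be shown with a toy model. This is a sketch under stated assumptions, not the SDK's code: ToyServer and CachingClient are hypothetical, and only the method name invalidate_tools_cache() is borrowed from the text above to make the correspondence clear.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ToyServer:
    """Hypothetical stand-in for a remote MCP server; counts list_tools() round trips."""
    tools: List[str]
    round_trips: int = 0

    def list_tools(self) -> List[str]:
        self.round_trips += 1
        return list(self.tools)


@dataclass
class CachingClient:
    """Toy model of cache_tools_list=True combined with invalidate_tools_cache()."""
    server: ToyServer
    cached: Optional[List[str]] = None

    def list_tools(self) -> List[str]:
        # Serve from the cache when possible; otherwise ask the server and remember the answer
        if self.cached is None:
            self.cached = self.server.list_tools()
        return list(self.cached)

    def invalidate_tools_cache(self) -> None:
        # Forget the cached list so the next list_tools() goes back to the server
        self.cached = None


server = ToyServer(tools=["read_file"])
client = CachingClient(server)

for _ in range(3):  # three agent runs
    client.list_tools()
print(server.round_trips)  # 1

server.tools.append("write_file")  # the server's tool list changes...
print(client.list_tools())         # ['read_file'] (stale: still served from the cache)

client.invalidate_tools_cache()
print(client.list_tools())         # ['read_file', 'write_file']
print(server.round_trips)          # 2
```

The stale result in the middle is exactly why caching is only safe when the tool list is fixed, or when you invalidate the cache whenever you know it has changed.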

End-to-end examples

View complete working examples at examples/mcp.

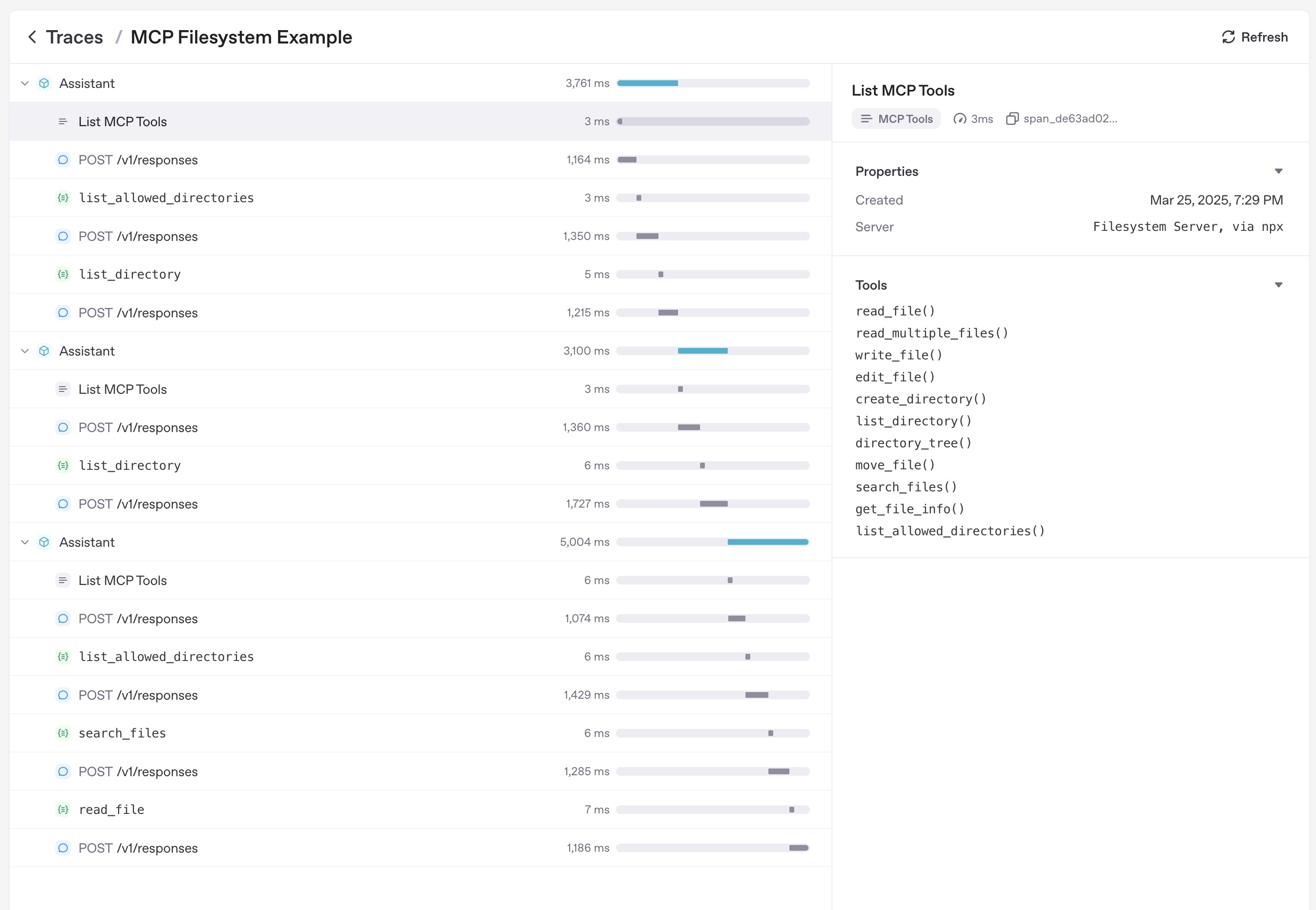

Tracing

Tracing automatically captures MCP operations, including:

- Calls to the MCP server to list tools

- MCP-related info on function calls